handle boundary region and multiple processes More...

#include <DomainDecompBase.h>

Public Member Functions | |

| DomainDecompBase () | |

| The Constructor determines the own rank and the number of the neighbours */. | |

| ~DomainDecompBase () override | |

| The Destructor finalizes MPI. | |

| virtual void | readXML (XMLfileUnits &xmlconfig) |

| void | exchangeMolecules (ParticleContainer *moleculeContainer, Domain *domain) |

| exchange molecules between processes More... | |

| virtual void | exchangeForces (ParticleContainer *moleculeContainer, Domain *domain) |

| Exchanges forces at the domain boundaries if it's required by the cell container. More... | |

| virtual int | getNonBlockingStageCount () |

| virtual bool | queryBalanceAndExchangeNonBlocking (bool forceRebalancing, ParticleContainer *moleculeContainer, Domain *domain, double etime) |

| Checks whether the balance and exchange step can be performed non-blocking. More... | |

| virtual void | balanceAndExchange (double lastTraversalTime, bool forceRebalancing, ParticleContainer *moleculeContainer, Domain *domain) |

| balance the load (and optimize communication) and exchange boundary particles More... | |

| virtual bool | procOwnsPos (double x, double y, double z, Domain *domain) final |

| find out whether the given position belongs to the domain of this process More... | |

| void | getBoundingBoxMinMax (Domain *domain, double *min, double *max) |

| get the minimum and maximum coordinate of the bounding box of this process' domain More... | |

| virtual double | getBoundingBoxMin (int dimension, Domain *domain) |

| get the minimum of the bounding box of this process' domain in the given dimension (0,1,2) More... | |

| virtual double | getBoundingBoxMax (int dimension, Domain *domain) |

| get the maximum of the bounding box of this process' domain in the given dimension (0,1,2) More... | |

| virtual void | printDecomp (const std::string &filename, Domain *domain, ParticleContainer *particleContainer) |

| writes information about the current decomposition into the given file More... | |

| virtual int | getRank () const |

| returns the own rank More... | |

| virtual int | getNumProcs () const |

| returns the number of processes More... | |

| virtual void | barrier () const |

| synchronizes all processes More... | |

| virtual double | getTime () const |

| returns the time in seconds since some time in the past | |

| virtual unsigned | Ndistribution (unsigned localN, float *minrnd, float *maxrnd) |

| returns total number of molecules More... | |

| virtual void | assertIntIdentity (int IX) |

| checks identity of random number generators More... | |

| virtual void | assertDisjunctivity (ParticleContainer *moleculeContainer) const |

| double | getIOCutoffRadius (int dim, Domain *domain, ParticleContainer *moleculeContainer) |

| returns an cutoff radius for a dimension for a global linked cells datastructure More... | |

| void | writeMoleculesToMPIFileBinary (const std::string &filename, ParticleContainer *moleculeContainer) const |

| appends molecule data to the file. The format is the same as that of the input file This version uses, MPI IO. More... | |

| void | writeMoleculesToFile (const std::string &filename, ParticleContainer *moleculeContainer, bool binary=false) const |

| appends molecule data to the file. The format is the same as that of the input file If MPI is enabled and binary files are supposed to be written this function will call writeMoleculesToMPIFileBinary(). Otherwise, to ensure that not more than one process writes to the file at any time, there is a loop over all processes with a barrier in between. More... | |

| void | updateSendLeavingWithCopies (bool sendTogether) |

| bool | sendLeavingWithCopies () const |

| virtual void | collCommInit (int numValues, int key=0) |

| has to call init method of a CollComm class More... | |

| virtual void | collCommFinalize () |

| has to call finalize method of a CollComm class More... | |

| virtual void | collCommAppendInt (int intValue) |

| has to call appendInt method of a CollComm class More... | |

| virtual void | collCommAppendUnsLong (unsigned long unsLongValue) |

| has to call appendUnsLong method of a CollComm class More... | |

| virtual void | collCommAppendFloat (float floatValue) |

| has to call appendFloat method of a CollComm class More... | |

| virtual void | collCommAppendDouble (double doubleValue) |

| has to call appendDouble method of a CollComm class More... | |

| virtual void | collCommAppendLongDouble (long double longDoubleValue) |

| has to call appendLongDouble method of a CollComm class More... | |

| virtual int | collCommGetInt () |

| has to call getInt method of a CollComm class More... | |

| virtual unsigned long | collCommGetUnsLong () |

| has to call getUnsLong method of a CollComm class More... | |

| virtual float | collCommGetFloat () |

| has to call getFloat method of a CollComm class More... | |

| virtual double | collCommGetDouble () |

| has to call getDouble method of a CollComm class More... | |

| virtual long double | collCommGetLongDouble () |

| has to call getLongDouble method of a CollComm class More... | |

| virtual void | collCommAllreduceSum () |

| has to call allreduceSum method of a CollComm class (none in sequential version) More... | |

| virtual void | collCommAllreduceSumAllowPrevious () |

| has to call allreduceSum method of a CollComm class (none in sequential version), allows for values of previous iteration. More... | |

| virtual void | collCommAllreduceCustom (ReduceType type) |

| has to call allreduceCustom method of a CollComm class (none in sequential version) More... | |

| virtual void | collCommScanSum () |

| has to call scanSum method of a CollComm class (none in sequential version) More... | |

| virtual void | collCommBroadcast (int root=0) |

| has to call broadcast method of a CollComm class (none in sequential version) More... | |

| virtual std::vector< int > | getNeighbourRanks () |

| virtual std::vector< int > | getNeighbourRanksFullShell () |

| virtual std::vector< std::vector< std::vector< int > > > | getAllRanks () |

| virtual MPI_Comm | getCommunicator () |

| virtual size_t | getTotalSize () override |

| virtual void | printSubInfo (int offset) override |

| virtual std::string | getName () override |

| virtual void | printCommunicationPartners (std::string filename) const |

Protected Member Functions | |

| void | addLeavingMolecules (std::vector< Molecule > &&invalidMolecules, ParticleContainer *moleculeContainer) |

| void | handleDomainLeavingParticles (unsigned dim, ParticleContainer *moleculeContainer) const |

| void | handleDomainLeavingParticlesDirect (const HaloRegion &haloRegion, ParticleContainer *moleculeContainer, std::vector< Molecule > &invalidParticles) const |

| void | handleForceExchange (unsigned dim, ParticleContainer *moleculeContainer) const |

| Does the force exchange for each dimension. Will be called for dim=0, 1 and 2. More... | |

| virtual void | handleForceExchangeDirect (const HaloRegion &haloRegion, ParticleContainer *moleculeContainer) const |

| void | populateHaloLayerWithCopies (unsigned dim, ParticleContainer *moleculeContainer) const |

| void | populateHaloLayerWithCopiesDirect (const HaloRegion &haloRegion, ParticleContainer *moleculeContainer, bool positionCheck=true) const |

Protected Attributes | |

| int | _rank |

| the id of the current process | |

| int | _numProcs |

| total number of processes in the simulation | |

Friends | |

| class | NeighbourCommunicationScheme |

| class | IndirectNeighbourCommunicationScheme |

| class | DirectNeighbourCommunicationScheme |

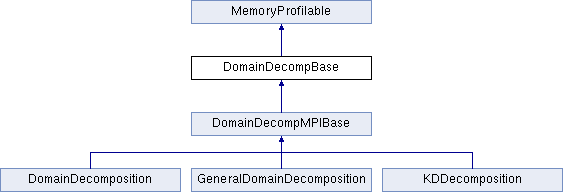

Detailed Description

handle boundary region and multiple processes

This program is designed to run on a HPC (High Performance Computer). But sometimes one might want to execute it on a single processor, possibly even without having MPI installed on that machine. One way to allow this would be to have two different versions of the program, one sequential version and one parallel version. But that isn't feasible, as it is hardly possible to keep them both up to date without investing a lot of additional time. Before describing how this problem is solved, you'll have to know a little bit about how the parallel version works.

At the moment, domain decomposition is used. The basic idea is, that the region which is simulated (usually a cube) is divided into several smaller regions. Each process works on one of those regions. But as the molecules move between regions, the processes have to communicate to exchange molecules. Also for the calculation of some values (e.g. macroscopic values), communication is necessary. Each time processes need to communicate, a method of this interface (that means a method of a class implementing this interface) is called, which then somehow does the communication.

Assume you have an class which implements this interface and which is capable of doing all the necessary stuff for the parallelization. Further assume that you have a second class which also implements this interface which is only capable of handling one process but doesn't need any MPI (with only one process, there is no need for message passing between processes). So the main program (or in this case the class Simulation) can decide which implementation to use. When MPI is available, the parallel version is used, otherwise the sequential version

Member Function Documentation

◆ assertDisjunctivity()

|

virtual |

Reimplemented in DomainDecompMPIBase.

◆ assertIntIdentity()

|

virtual |

checks identity of random number generators

Reimplemented in DomainDecompMPIBase.

◆ balanceAndExchange()

|

virtual |

balance the load (and optimize communication) and exchange boundary particles

This method is used to perform a new decomposition of the global domain with the goal of getting the equal load (and possibly additional goals) on each process. This method is then also responsible for redistributing all particles, so after the method was called, each process has a domain with all particles belonging to this domain (as if exchangeParticles was called after the new decomposition).

- Parameters

-

balance if true, a rebalancing is forced; otherwise automatic balancing of Decomposition is applied moleculeContainer needed for calculating load and to get the particles domain is e.g. needed to get the size of the local domain

Reimplemented in DomainDecomposition, GeneralDomainDecomposition, and KDDecomposition.

◆ barrier()

|

virtual |

synchronizes all processes

Reimplemented in DomainDecompMPIBase.

◆ collCommAllreduceCustom()

|

virtual |

has to call allreduceCustom method of a CollComm class (none in sequential version)

Reimplemented in DomainDecompMPIBase.

◆ collCommAllreduceSum()

|

virtual |

has to call allreduceSum method of a CollComm class (none in sequential version)

Reimplemented in DomainDecompMPIBase.

◆ collCommAllreduceSumAllowPrevious()

|

virtual |

has to call allreduceSum method of a CollComm class (none in sequential version), allows for values of previous iteration.

Reimplemented in DomainDecompMPIBase.

◆ collCommAppendDouble()

|

virtual |

has to call appendDouble method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommAppendFloat()

|

virtual |

has to call appendFloat method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommAppendInt()

|

virtual |

has to call appendInt method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommAppendLongDouble()

|

virtual |

has to call appendLongDouble method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommAppendUnsLong()

|

virtual |

has to call appendUnsLong method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommBroadcast()

|

virtual |

has to call broadcast method of a CollComm class (none in sequential version)

Reimplemented in DomainDecompMPIBase.

◆ collCommFinalize()

|

virtual |

has to call finalize method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommGetDouble()

|

virtual |

has to call getDouble method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommGetFloat()

|

virtual |

has to call getFloat method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommGetInt()

|

virtual |

has to call getInt method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommGetLongDouble()

|

virtual |

has to call getLongDouble method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommGetUnsLong()

|

virtual |

has to call getUnsLong method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommInit()

|

virtual |

has to call init method of a CollComm class

Reimplemented in DomainDecompMPIBase.

◆ collCommScanSum()

|

virtual |

has to call scanSum method of a CollComm class (none in sequential version)

Reimplemented in DomainDecompMPIBase.

◆ exchangeForces()

|

virtual |

Exchanges forces at the domain boundaries if it's required by the cell container.

- Parameters

-

moleculeContainer The particle container domain

Reimplemented in DomainDecompMPIBase.

◆ exchangeMolecules()

| void DomainDecompBase::exchangeMolecules | ( | ParticleContainer * | moleculeContainer, |

| Domain * | domain | ||

| ) |

exchange molecules between processes

molecules which aren't in the domain of their process any more are transferred to their neighbours. Additionally, the molecules for the halo-region are transferred. This implementation is the one used in sequential mode.

- Parameters

-

moleculeContainer needed to get those molecules which have to be exchanged domain is e.g. needed to get the size of the local domain

◆ getBoundingBoxMax()

|

virtual |

get the maximum of the bounding box of this process' domain in the given dimension (0,1,2)

- Parameters

-

dimension coordinate direction for which the maximum of the bounding box is returned domain here the bounding box is stored

Reimplemented in DomainDecomposition, GeneralDomainDecomposition, and KDDecomposition.

◆ getBoundingBoxMin()

|

virtual |

get the minimum of the bounding box of this process' domain in the given dimension (0,1,2)

- Parameters

-

dimension coordinate direction for which the minimum of the bounding box is returned domain here the bounding box is stored

Reimplemented in DomainDecomposition, GeneralDomainDecomposition, and KDDecomposition.

◆ getBoundingBoxMinMax()

| void DomainDecompBase::getBoundingBoxMinMax | ( | Domain * | domain, |

| double * | min, | ||

| double * | max | ||

| ) |

get the minimum and maximum coordinate of the bounding box of this process' domain

- Parameters

-

domain min lower coordinate of the bounding box max upper coordinate of the bounding box

◆ getIOCutoffRadius()

| double DomainDecompBase::getIOCutoffRadius | ( | int | dim, |

| Domain * | domain, | ||

| ParticleContainer * | moleculeContainer | ||

| ) |

returns an cutoff radius for a dimension for a global linked cells datastructure

This method is e.g. used for the parallelCheckpointWriter, which builds a new global cell structure. This method returns a cutoff radius, so that each cell is fully contained in one process

- Parameters

-

dim the dimension, which will be returned domain e.g. needed to get the bounding boxes moleculeContainer e.g. needed for the cutoff radius

◆ getName()

|

inlineoverridevirtual |

Implements MemoryProfilable.

◆ getNeighbourRanksFullShell()

|

inlinevirtual |

Reimplemented in DomainDecomposition.

◆ getNonBlockingStageCount()

|

virtual |

Specifies the amount of non-blocking stages, when performing overlapping balanceAndExchange and computation. For a communication scheme, where only direct neighbours communicate, 3 stages of communication are necessary, since the particles have to be transmitted in the x-direction first, then in the y-direction, then in the z-direction.

- Returns

- The amount of communication stages. Returns -1 if it is not possible.

Reimplemented in DomainDecompMPIBase.

◆ getNumProcs()

|

virtual |

returns the number of processes

- Returns

- number of processes

◆ getRank()

|

virtual |

returns the own rank

- Returns

- rank of the process

◆ getTotalSize()

|

inlineoverridevirtual |

Implements MemoryProfilable.

◆ handleDomainLeavingParticles()

|

protected |

Handles the sequential version of particles leaving the domain. Also used as a fall-back for the MPI variant if a process spans an entire dimension.

- Parameters

-

dim moleculeContainer

◆ handleDomainLeavingParticlesDirect()

|

protected |

Handles the sequential version of particles leaving the domain in a direct communication pattern. Hereby all particles will be moved directly to the specific region without intermediary copies. x, y and z closely resemble the offset of the current region.

- Parameters

-

x -1, 0 or 1 y -1, 0 or 1 z -1, 0 or 1 moleculeContainer invalidParticles used if moleculeContainer->isInvalidParticleReturner() is true

◆ handleForceExchange()

|

protected |

Does the force exchange for each dimension. Will be called for dim=0, 1 and 2.

- Parameters

-

dim The dimension (0,1 or 2) moleculeContainer The particle container

◆ handleForceExchangeDirect()

|

protectedvirtual |

Does the force exchange for each direction.

- Parameters

-

haloRegion moleculeContainer

◆ Ndistribution()

|

virtual |

returns total number of molecules

Reimplemented in DomainDecompMPIBase.

◆ printDecomp()

|

virtual |

writes information about the current decomposition into the given file

- Parameters

-

filename name of the file into which the data will be written domain e.g. needed to get the bounding boxes particleContainer needed to print information about the parts of the decomposition

Reimplemented in DomainDecompMPIBase.

◆ printSubInfo()

|

inlineoverridevirtual |

Implements MemoryProfilable.

◆ procOwnsPos()

|

finalvirtual |

find out whether the given position belongs to the domain of this process

This method is e.g. used by a particle generator which creates particles within the bounding box (cuboid) of a local domain, but only those particles which really belong to the domain (which might not be cuboid) are allowed to be really used.

- Parameters

-

x x-coordinate of the position to be checked y y-coordinate of the position to be checked z z-coordinate of the position to be checked domain might be needed to get the bounding box

◆ queryBalanceAndExchangeNonBlocking()

|

virtual |

Checks whether the balance and exchange step can be performed non-blocking.

A non-blocking behaviour is typically possible, as long as no rebalancing has to be done.

- Parameters

-

forceRebalancing if true, a rebalancing is forced; otherwise automatic balancing of Decomposition is applied moleculeContainer needed for calculating load and to get the particles domain is e.g. needed to get the size of the local domain

Reimplemented in DomainDecomposition, GeneralDomainDecomposition, and KDDecomposition.

◆ readXML()

|

virtual |

Reimplemented in DomainDecompMPIBase, DomainDecomposition, GeneralDomainDecomposition, and KDDecomposition.

◆ writeMoleculesToFile()

| void DomainDecompBase::writeMoleculesToFile | ( | const std::string & | filename, |

| ParticleContainer * | moleculeContainer, | ||

| bool | binary = false |

||

| ) | const |

appends molecule data to the file. The format is the same as that of the input file If MPI is enabled and binary files are supposed to be written this function will call writeMoleculesToMPIFileBinary(). Otherwise, to ensure that not more than one process writes to the file at any time, there is a loop over all processes with a barrier in between.

- Parameters

-

filename name of the file into which the data will be written moleculeContainer all Particles from this container will be written to the file binary flag, that is true if the output shall be binary

◆ writeMoleculesToMPIFileBinary()

| void DomainDecompBase::writeMoleculesToMPIFileBinary | ( | const std::string & | filename, |

| ParticleContainer * | moleculeContainer | ||

| ) | const |

appends molecule data to the file. The format is the same as that of the input file This version uses, MPI IO.

- Parameters

-

filename name of the file into which the data will be written moleculeContainer all Particles from this container will be written to the file

The documentation for this class was generated from the following files:

- src/parallel/DomainDecompBase.h

- src/parallel/DomainDecompBase.cpp